International

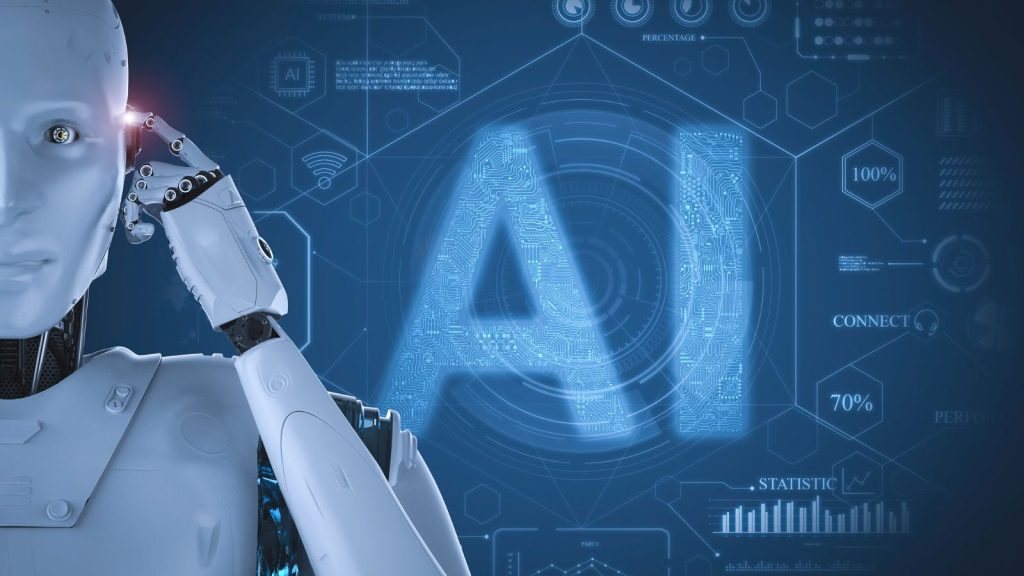

Experts: AI Could Lead To Human Extinction

USA – Artificial intelligence could pose a “risk of extinction” to humanity on the scale of nuclear war or pandemics, and mitigating that risk should be a “global priority,” according to an open letter signed by AI leaders.

- National (2 days ago): Queen’s Staircase Gets $200K Facelift

- Court (3 days ago): Omar Archer Loses Appeal in Libel Case

- National (3 days ago): Residents React To $165M Royal Beach Club Groundbreaking

- Court (2 days ago): Arrest Warrant Issued for Suspect Accused of 2020 Drive-By Killing

- National (2 days ago): Carolyn Smith’s Journey: From Struggle to Success

- Court (2 days ago): Mom Charged After Beating Child with USB Cord

- National (2 days ago): 61-Year-Old Dies In Deadly Collision On Midshipman Road

- Court (3 days ago): Cat Island Man Sentenced to 33 Years for Killing American In 2015